Why Probability in Quantum Mechanics is Given by the Wave Function Squared

One of the most profound and mysterious principles in all of physics is the Born Rule, named after Max Born (1882–1970).

In quantum mechanics, particles don’t have classical properties like “position” or “momentum”; rather, there is a wave function that assigns a (complex) number, called the “amplitude,” to each possible measurement outcome.

The Born Rule is then very simple: it says that the probability of obtaining any possible measurement outcome is equal to the square of the corresponding amplitude (more precisely, its squared magnitude, since amplitudes are complex numbers). In symbols, P(x) = |ψ(x)|².

The Born Rule is certainly correct, as far as all of our experimental efforts have been able to discern.

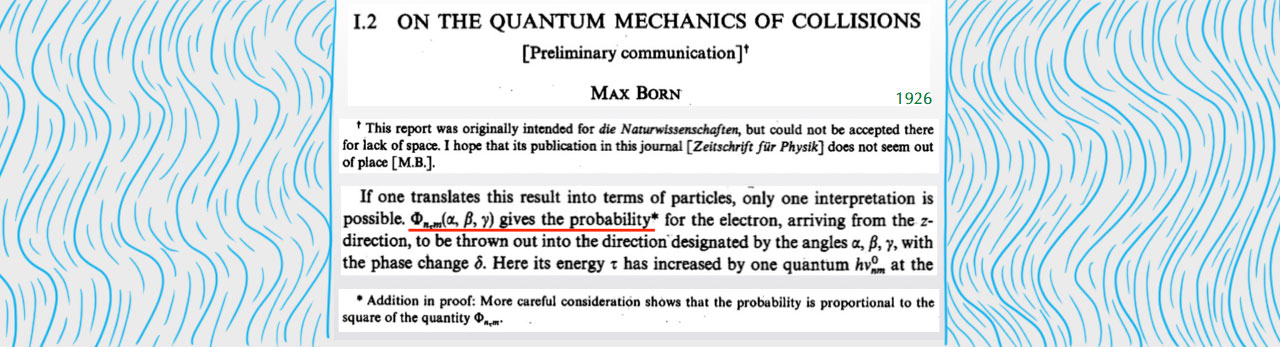

Born himself kind of stumbled onto his Rule. Here is an excerpt from his 1926 paper:

That’s right. Born’s paper was rejected at first, and when it was later accepted by another journal, he didn’t even get the Born Rule right.

At first he said the probability was equal to the amplitude, and only in an added footnote did he correct it to being the amplitude squared.

And a good thing, too, since amplitudes can be negative or even complex, and a probability can be neither.
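A minimal Python sketch of the rule, using made-up amplitudes for three outcomes (one negative, one purely imaginary, one complex). Squaring the magnitudes gives non-negative numbers that sum to one, while the amplitudes themselves could not serve as probabilities. The outcome labels and the function name `born_probabilities` are illustrative only.

```python
import cmath
import math

# Hypothetical amplitudes for three outcomes: one negative, one imaginary, one complex.
amplitudes = {
    "a": -0.6,
    "b": 0.48j,
    "c": 0.64 * cmath.exp(1j * math.pi / 3),
}


def born_probabilities(amps):
    """Born Rule: P(outcome) = |amplitude|**2, after checking that the state is normalized."""
    probs = {k: abs(a) ** 2 for k, a in amps.items()}
    total = sum(probs.values())
    if not math.isclose(total, 1.0):
        raise ValueError(f"wave function is not normalized (total = {total})")
    return probs


probs = born_probabilities(amplitudes)  # about {"a": 0.36, "b": 0.2304, "c": 0.4096}

# Born's first guess, P = amplitude, cannot work: no amplitude here is a non-negative real number.
usable_as_probabilities = [
    k for k, a in amplitudes.items() if isinstance(a, float) and a >= 0
]
```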

The status of the Born Rule depends greatly on one’s preferred formulation of quantum mechanics. When we teach quantum mechanics to students, we generally give them a list of postulates that goes something like this:

1. Quantum states are represented by wave functions, which are vectors in a mathematical space called Hilbert space.
2. Wave functions evolve in time according to the Schrödinger equation.
3. The act of measuring a quantum system returns a number, known as the eigenvalue of the quantity being measured.
4. The probability of getting any particular eigenvalue is equal to the square of the amplitude for that eigenvalue.
5. After the measurement is performed, the wave function “collapses” to a new state in which the wave function is localized precisely on the observed eigenvalue (as opposed to being in a superposition of many different possibilities).
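Postulates 3 through 5 can be sketched in a few lines of Python for a single spin. This is a minimal sketch under stated assumptions: the observable is nondegenerate, its eigenvalues and normalized eigenvectors are supplied by hand (here, spin along x written in the z basis), and random sampling stands in for the textbook’s unexplained “measurement.” The names `inner` and `measure` are hypothetical.

```python
import math
import random


def inner(u, v):
    """Inner product <u|v> of two vectors given as lists of (complex) numbers."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def measure(psi, eigenpairs, rng=random):
    """Sketch of textbook postulates 3-5 for a nondegenerate observable.

    eigenpairs: list of (eigenvalue, normalized eigenvector) pairs, assumed given.
    Returns (observed eigenvalue, collapsed state, Born probabilities).
    """
    amps = [inner(vec, psi) for _, vec in eigenpairs]  # amplitude for each eigenvalue
    probs = [abs(a) ** 2 for a in amps]                 # postulate 4: Born Rule
    k = rng.choices(range(len(eigenpairs)), weights=probs)[0]  # postulate 3: one eigenvalue
    value, vec = eigenpairs[k]
    return value, list(vec), probs                      # postulate 5: collapse onto eigenvector


# Hypothetical example: spin measured along x, with states written in the z basis.
s = 1 / math.sqrt(2)
sigma_x = [(+1, [s, s]), (-1, [s, -s])]
theta = math.pi / 8
psi = [math.cos(theta), math.sin(theta)]
value, post_state, probs = measure(psi, sigma_x, random.Random(0))
# probs is about [0.854, 0.146], i.e. (1 + sin 2θ)/2 and (1 - sin 2θ)/2
```

Note how much is put in by hand: the rule for probabilities, the sampling, and the jump to an eigenvector are all extra ingredients on top of the first two postulates.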


It’s an ungainly mess, we all agree.

You see that the Born Rule is simply postulated right there, as #4. Perhaps we can do better.

There are other formulations, and you know that my own favorite is Everettian (“Many-Worlds”) quantum mechanics.

Everettian quantum mechanics also comes with a list of postulates. Here it is:

1. Quantum states are represented by wave functions, which are vectors in a mathematical space called Hilbert space.
2. Wave functions evolve in time according to the Schrödinger equation.

That’s it! Quite a bit simpler, and the two postulates are exactly the same as the first two of the textbook approach. Everett, in other words, is claiming that all the weird stuff about measurement and collapse in the conventional way of thinking about quantum mechanics isn’t something we need to add on; it comes out automatically from the formalism.

The trickiest thing to extract from the formalism is the Born Rule. That’s what Charles (“Chip”) Sebens and I tackled in our recent paper, “Self-Locating Uncertainty and the Origin of Probability in Everettian Quantum Mechanics,” by Charles T. Sebens and Sean M. Carroll. Here is the abstract:

A longstanding issue in attempts to understand the Everett (Many-Worlds) approach to quantum mechanics is the origin of the Born rule: why is the probability given by the square of the amplitude? Following Vaidman, we note that observers are in a position of self-locating uncertainty during the period between the branches of the wave function splitting via decoherence and the observer registering the outcome of the measurement. In this period it is tempting to regard each branch as equiprobable, but we give new reasons why that would be inadvisable. Applying lessons from this analysis, we demonstrate (using arguments similar to those in Zurek’s envariance-based derivation) that the Born rule is the uniquely rational way of apportioning credence in Everettian quantum mechanics. In particular, we rely on a single key principle: changes purely to the environment do not affect the probabilities one ought to assign to measurement outcomes in a local subsystem. We arrive at a method for assigning probabilities in cases that involve both classical and quantum self-locating uncertainty. This method provides unique answers to quantum Sleeping Beauty problems, as well as a well-defined procedure for calculating probabilities in quantum cosmological multiverses with multiple similar observers.

Chip is a graduate student in the philosophy department at Michigan, which is great because this work lies squarely at the boundary of physics and philosophy. (I guess it is possible.) The paper itself leans more toward the philosophical side of things; if you are a physicist who just wants the equations, we have a shorter conference proceeding.

Before explaining what we did, let me first say a bit about why there’s a puzzle at all.

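Let’s think about the wave function for a spin, a spin-measuring apparatus, and an environment (the rest of the world). It might initially take the form

(α[up] + β[down] ; apparatus says “ready” ; environment0)    (1)

The first slot is the spin, in a superposition of “up” and “down” with amplitudes α and β; the second is the apparatus in its ready state; the third is the environment. By the Born Rule, a measurement will find spin-up with probability |α|² and spin-down with probability |β|².

In Everettian quantum mechanics (EQM), wave functions never collapse. Instead, the state (1) smoothly evolves into something like

α([up] ; apparatus says “up” ; environment1) + β([down] ; apparatus says “down” ; environment2)    (2)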

The wave function has split into branches that don’t ever talk to each other, because the two environment states are different and will stay that way. A state like this simply arises from normal Schrödinger evolution from the state we started with.
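The claim that a state like (2) follows from the initial state by ordinary unitary evolution can be checked in a toy model. This is a minimal sketch, assuming that pre-measurement and decoherence can each be modeled as a permutation of basis states. A permutation is unitary, but it is a stand-in, not a real Hamiltonian. The basis labels (“ready,” “environment0,” and so on), the amplitudes, and the function names are illustrative. Notice that α and β come through completely untouched.

```python
import math

ENV_FOR = {"up": "environment1", "down": "environment2"}


def apply_permutation(state, step):
    """Apply a unitary that permutes basis states, |b> -> |step(b)>, to a superposition."""
    out = {}
    for basis, amp in state.items():
        image = step(basis)
        out[image] = out.get(image, 0) + amp
    return out


def premeasure(b):
    """Apparatus in 'ready' records the spin; written as a swap so it is a permutation."""
    spin, app, env = b
    if app == "ready":
        return (spin, spin, env)
    if app == spin:
        return (spin, "ready", env)
    return b


def decohere(b):
    """Environment records the apparatus reading; again a swap of basis states."""
    spin, app, env = b
    if app in ENV_FOR:
        if env == "environment0":
            return (spin, app, ENV_FOR[app])
        if env == ENV_FOR[app]:
            return (spin, app, "environment0")
    return b


alpha, beta = math.sqrt(0.7), math.sqrt(0.3)  # hypothetical amplitudes
state1 = {("up", "ready", "environment0"): alpha,
          ("down", "ready", "environment0"): beta}
state2 = apply_permutation(apply_permutation(state1, premeasure), decohere)
# state2 == {("up", "up", "environment1"): alpha, ("down", "down", "environment2"): beta}
```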

So here is the problem. After the splitting from (1) to (2), the wave function coefficients α and β just kind of go along for the ride.

If you find yourself in the branch where the spin is up, the coefficient attached to your branch is α, but so what? How do you know what kind of coefficient is sitting outside the branch you are living on? If anything, shouldn’t we declare the two branches to be equally likely (so-called “branch-counting”)?

There was nothing stochastic or random about any of this process; the entire evolution was perfectly deterministic.

It’s not right to say “Before the measurement, I didn’t know which branch I was going to end up on.” You know precisely that one copy of your future self will appear on each branch. Why in the world should we be talking about probabilities?

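There is a result called Gleason’s theorem, which says roughly that the Born Rule is the only consistent way to assign probabilities to measurement outcomes that depends on the wave function alone (for Hilbert spaces of dimension three or more). So the real question isn’t “Why the square?”; it’s “Whence probability?”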
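For reference, here is one standard way to state Gleason’s theorem (Gleason, 1957). Let the Hilbert space be separable with dimension at least three, and let $\mu$ assign a number to every orthogonal projector $P$ on it. Then

$$
\mu(P)\in[0,1],\quad \mu(\mathbb{1})=1,\quad \mu\Big(\sum_i P_i\Big)=\sum_i \mu(P_i)\ \text{for mutually orthogonal } P_i
\;\;\Longrightarrow\;\; \mu(P)=\operatorname{Tr}(\rho P)\ \text{for some density operator } \rho .
$$

For a pure state $\rho = |\psi\rangle\langle\psi|$ and a single outcome $P = |x\rangle\langle x|$, this reduces to $\mu(P) = |\langle x|\psi\rangle|^2 = |\psi(x)|^2$, the Born Rule. The theorem takes for granted that some probability measure is being sought; it does not say why there should be probabilities in the first place.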

There have been several attempts to answer that question within EQM. Perhaps the best-known is an approach based on decision theory.

There, the approach to probability is essentially operational: given the setup of Everettian quantum mechanics, how should a rational person behave, in terms of making bets and predicting experimental outcomes, etc.?

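Its proponents show that there is one unique answer, which is given by the Born Rule. In other words, the question “Whence probability?” is sidestepped by arguing that a rational agent in an Everettian universe should act as if measurement outcomes occur with Born Rule probabilities.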

One of my favorites is Wojciech Zurek’s approach based on “envariance.” Rather than using words like “decision theory” and “rationality” that make physicists nervous, Zurek claims that the underlying symmetries of quantum mechanics pick out the Born Rule uniquely.

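But it is subject to the criticism that it doesn’t really teach us anything that we didn’t already know from Gleason’s theorem. That is, Zurek gives us more reason to think that the Born Rule is the right rule, but says little about why there should be probabilities in the first place.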

Here is where Chip and I try to contribute something. We use the idea of “self-locating uncertainty,” which has been much discussed in the philosophical literature, and has been applied to quantum mechanics by Lev Vaidman.

Self-locating uncertainty occurs when you know that there are multiple observers in the universe who find themselves in exactly the same conditions that you are in right now, but you don’t know which one of these observers you are. That can happen in “big universe” cosmology, where it leads to the measure problem. But it automatically happens in EQM, whether you like it or not.

Think of observing the spin of a particle, as in our example above. The steps are:

1. Everything is in its starting state, before the measurement.
2. The apparatus interacts with the system to be observed and becomes entangled. (“Pre-measurement.”)
3. The apparatus becomes entangled with the environment, branching the wave function. (“Decoherence.”)
4. The observer reads off the result of the measurement from the apparatus.

The point is that in between steps 3 and 4, the wave function of the universe has branched into two, but the observer doesn’t yet know which branch they are on.

There are two copies of the observer that are in identical states, even though they’re part of different “worlds.” That’s the moment of self-locating uncertainty. Here it is in equations, although I don’t think it’s much help.
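A rough sketch of that moment, between steps 3 and 4, in the same slot notation used for the spin example earlier. The observer slot and its “hasn’t looked yet” label are illustrative additions, not equations from the paper:

$$
\alpha\,\big(\,[\text{up}]\ ;\ \text{apparatus says up}\ ;\ \text{observer hasn't looked yet}\ ;\ \text{environment}_1\big)
\;+\;
\beta\,\big(\,[\text{down}]\ ;\ \text{apparatus says down}\ ;\ \text{observer hasn't looked yet}\ ;\ \text{environment}_2\big)
$$

The observer slot is identical in both terms, so nothing in the observer’s own state says which term they are in. Once they look (step 4), that slot becomes “saw up” in one term and “saw down” in the other, and the self-locating uncertainty is gone.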

You might say “What if I am the apparatus myself?” That is, what if I observe the outcome directly, without any intermediating macroscopic equipment?

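Nice try, but no dice. Decoherence happens incredibly quickly. Even if you take the extreme case where you look at the spin directly with your own eye, your eye becomes entangled with the spin and decoheres long before the signal reaches your brain and you become aware of the outcome.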

In that sense, probability is inevitable, even though the theory is deterministic: in the phase of uncertainty, we need to assign probabilities to finding ourselves on different branches.

So what do we do about it? As I mentioned, there’s been a lot of work on how to deal with self-locating uncertainty, i.e. how to apportion credences (degrees of belief) to different possible locations for yourself in a big universe.

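One influential paper is by Adam Elga, and comes with the charming title of “Defeating Dr. Evil With Self-Locating Belief.” (Philosophers have more fun with their titles than physicists do.) Elga argues for a principle of Indifference: if there are truly multiple copies of you in the world, having identical experiences, you should assign equal credence to being any one of them. Crucially, he doesn’t simply assert Indifference; he derives it from a few simple assumptions about how a rational person should reason.

Naïvely, applying Indifference to quantum mechanics just leads to branch-counting: if you assign equal probability to every possible appearance of equivalent observers, and there are two branches, each branch should get equal probability.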

But that’s a disaster; it says we should simply ignore the amplitudes entirely, rather than using the Born Rule. This bit of tension has led to some worry among philosophers who worry about such things.
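To see concretely why branch-counting is a disaster, compare it with the Born Rule for a hypothetical pair of branches with |α|² = 0.9 and |β|² = 0.1. The sketch also shows a further problem often raised against branch-counting (an illustration here, not an argument taken from the paper): the counts change if one branch later decoheres into sub-branches, so the credence for “down” would jump from 1/2 to 2/3, while the Born weights stay put. Function names are illustrative.

```python
import math


def branch_counting(amps):
    """Naive Indifference: every branch gets equal credence; amplitudes are ignored."""
    return [1 / len(amps)] * len(amps)


def born(amps):
    """Born Rule: credence proportional to |amplitude|**2."""
    weights = [abs(a) ** 2 for a in amps]
    total = sum(weights)
    return [w / total for w in weights]


amps = [math.sqrt(0.9), math.sqrt(0.1)]   # hypothetical [up, down] amplitudes
counted = branch_counting(amps)           # [0.5, 0.5]
born_probs = born(amps)                   # about [0.9, 0.1]

# Let the "down" branch decohere further into two equal-amplitude sub-branches.
amps_split = [math.sqrt(0.9), math.sqrt(0.05), math.sqrt(0.05)]
counted_down = sum(branch_counting(amps_split)[1:])   # jumps to about 2/3
born_down = sum(born(amps_split)[1:])                 # stays about 0.1
```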

Resolving this tension is perhaps the most useful thing Chip and I do in our paper. Rather than naïvely applying Indifference to quantum mechanics, we go back to the “simple assumptions” and try to derive it from scratch. We were able to pinpoint one hidden assumption that seems quite innocent, but actually does all the heavy lifting when it comes to quantum mechanics. We call it the “Epistemic Separability Principle,” or ESP for short. Here is the informal version (see the paper for pedantically careful formulations):

ESP: The credence one should assign to being any one of several observers having identical experiences is independent of features of the environment that aren’t affecting the observers.

That is, the probabilities you assign to things happening in your lab, whatever they may be, should be exactly the same if we tweak the universe just a bit by moving around some rocks on a planet orbiting a star in the Andromeda galaxy. ESP simply asserts that our knowledge is separable: how we talk about what happens here is independent of what is happening far away. (Our system here can still be entangled with some system far away; under unitary evolution, changing that far-away system doesn’t change the entanglement.)
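One mathematical fact that makes ESP look natural can be checked directly: a unitary acting only on the environment leaves the system’s reduced density matrix, and hence every local measurement statistic, unchanged, even when system and environment are entangled. ESP itself is a principle about credences and says more than this; the sketch below only illustrates the underlying fact, with a hypothetical state, a hypothetical environment unitary, and function names invented for illustration.

```python
import cmath
import math


def reduced_density_matrix(c):
    """Partial trace over the environment: rho[s][t] = sum_e c[s][e] * conj(c[t][e])."""
    n = len(c)
    return [[sum(c[s][e] * c[t][e].conjugate() for e in range(len(c[s])))
             for t in range(n)] for s in range(n)]


def act_on_environment(u, c):
    """Apply environment unitary u to each system row: c'[s][e] = sum_f u[e][f] * c[s][f]."""
    return [[sum(u[e][f] * row[f] for f in range(len(row))) for e in range(len(u))]
            for row in c]


# Hypothetical entangled state alpha|up>|E1> + beta|down>|E2>, stored as c[system][environment].
alpha, beta = math.sqrt(0.7), math.sqrt(0.3)
c = [[alpha, 0.0],
     [0.0, beta]]

# Hypothetical far-away change: the unitary exp(-i*phi*X) acting on the environment only.
phi = 0.4
u = [[math.cos(phi), -1j * math.sin(phi)],
     [-1j * math.sin(phi), math.cos(phi)]]

rho_before = reduced_density_matrix(c)
rho_after = reduced_density_matrix(act_on_environment(u, c))
# Both are (numerically) diag(0.7, 0.3): local statistics are untouched.
```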

The ESP is quite a mild assumption, and to me it seems like a necessary part of being able to think of the universe as consisting of separate pieces.

If you can’t assign credences locally without knowing about the state of the whole universe, there’s no real sense in which the rest of the world is really separate from you. It is certainly implicitly used by Elga (he assumes that credences are unchanged by some hidden person tossing a coin).

With this assumption in hand, we are able to demonstrate that Indifference does not apply to branching quantum worlds in the naïve way. Indeed, we show that you should assign equal credences to two different branches if and only if the amplitudes for each branch are precisely equal!

That’s because the proof of Indifference relies on shifting around different parts of the state of the universe and demanding that the answers to local questions not be altered; it turns out that this only works in quantum mechanics if the amplitudes are equal, which is certainly consistent with the Born Rule.

See the papers for the actual argument; it’s straightforward but a little tedious. The basic idea is that you set up a situation in which more than one quantum object is measured at the same time, and you ask what happens when you consider different objects to be “the system you will look at” versus “part of the environment.” If you want there to be a consistent way of assigning credences in all cases, you are led inevitably to equal probabilities when (and only when) the amplitudes are equal.
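A toy version of the kind of symmetry doing the work here, in the style of Zurek’s envariance rather than the paper’s actual ESP argument (the apparatus slot is dropped for brevity). For α([up] ; environment1) + β([down] ; environment2), swapping up and down on the system can be undone by swapping environment1 and environment2, touching only the environment, exactly when α = β. Roughly: if a purely environmental operation restores the state, the swap cannot have changed local credences, yet it exchanged which outcome goes with which amplitude, so the two outcomes must get equal credence. The sketch assumes real, non-negative amplitudes (with complex amplitudes, phase rotations on the environment make |α| = |β| the relevant condition); function names are illustrative.

```python
import math


def swap_system(state):
    """Exchange up <-> down on the measured system only."""
    flip = {"up": "down", "down": "up"}
    return {(flip[s], e): a for (s, e), a in state.items()}


def swap_environment(state):
    """Exchange environment1 <-> environment2, leaving the system alone."""
    flip = {"environment1": "environment2", "environment2": "environment1"}
    return {(s, flip[e]): a for (s, e), a in state.items()}


def environment_can_undo_swap(alpha, beta):
    """True if a system swap followed by an environment swap restores the original state."""
    psi = {("up", "environment1"): alpha, ("down", "environment2"): beta}
    restored = swap_environment(swap_system(psi))
    return restored.keys() == psi.keys() and all(
        math.isclose(restored[k], psi[k]) for k in psi)


equal = environment_can_undo_swap(1 / math.sqrt(2), 1 / math.sqrt(2))   # True
unequal = environment_can_undo_swap(math.sqrt(0.7), math.sqrt(0.3))     # False
```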

What if the amplitudes for the two branches are not equal?

Here we can borrow some math from Zurek. (Indeed, our argument can be thought of as a love child of Vaidman and Zurek, with Elga as midwife.) In his envariance paper, Zurek shows how to start with a case of unequal amplitudes and reduce it to the case of many more branches with equal amplitudes.

The number of these pseudo-branches you need is proportional to (wait for it) the square of the amplitude.

Thus, you get out the full Born Rule.
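The counting step of Zurek’s construction is simple arithmetic, sketched here under the assumption that |α|² is a rational number m/n; the unitary that actually performs the fine-graining is not modeled. Splitting into n pseudo-branches of equal amplitude 1/√n, with m of them carrying “up,” and giving each equal credence yields P(up) = m/n = |α|². The function name `fine_grain` is hypothetical.

```python
from fractions import Fraction
import math


def fine_grain(p_up):
    """Split alpha|up> + beta|down> into equal-amplitude pseudo-branches.

    p_up is |alpha|**2 as an exact Fraction (rational case only; irrational
    values need an extra limiting argument that is not shown here).
    """
    p = Fraction(p_up)
    n = p.denominator                 # total number of pseudo-branches
    m = p.numerator                   # pseudo-branches attached to "up"
    amplitude = 1 / math.sqrt(n)      # every pseudo-branch gets the same amplitude
    return ["up"] * m + ["down"] * (n - m), amplitude


branches, amp = fine_grain(Fraction(2, 3))        # ['up', 'up', 'down'], 1/sqrt(3)
credence_each = Fraction(1, len(branches))        # equal amplitudes -> equal credence
p_up = credence_each * branches.count("up")       # Fraction(2, 3), i.e. |alpha|**2
```

The number of “up” pseudo-branches, m, grows in proportion to |α|², which is the sense in which counting equal-amplitude branches reproduces the square.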

We like this derivation in part because it treats probabilities as epistemic (statements about our knowledge of the world), not merely operational. Quantum probabilities are really credences: statements about the best degree of belief we can assign in conditions of uncertainty, rather than statements about truly stochastic dynamics or frequencies in the limit of an infinite number of outcomes.

But these degrees of belief aren’t completely subjective in the conventional sense, either; there is a uniquely rational choice for how to assign them.

There are still puzzles to be worked out, no doubt, especially around the issues of exactly how and when branching happens, and how branching structures are best defined. (I’m off to a workshop next month to think about precisely these questions.) But these seem like relatively tractable technical challenges to me, rather than looming deal-breakers.

EQM is an incredibly simple theory that (I can now argue in good faith) makes sense and fits the data. Now it’s just a matter of convincing the rest of the world!